AI companions are changing how people connect emotionally. Apps such as Replika, Character.ai, and tools built on ChatGPT offer personalized conversation, warmth, and support. Does using these AI companions cross a line into emotional cheating? For many readers in the U.S., this is becoming a pressing question.

First, some key terms. A ‘virtual boyfriend’ is an AI that offers romantic attention. A ‘situationship with AI’ is an ongoing emotional connection that is never clearly defined. ‘AI companion’ covers chatbots and apps used for comfort or romance. ‘Emotional infidelity’ means forming an intimate emotional bond outside one’s relationship without the partner’s knowledge. We’ll return to these definitions throughout the article.

This article looks at the psychology and ethics of AI relationships. It draws on insights from therapists, relationship experts, and research on how people connect online. We also look at AI apps such as Replika and Woebot, combining research findings with real app experiences to give a complete picture.

You’ll learn signs of when AI use might be a problem, hear from experts, and find out how to draw lines. We’ll also talk about the ethics of these AI relationships for both creators and users. This way, you can figure out what counts as harmless fun versus emotional cheating.

Key Takeaways

- AI companions can feel emotionally real, raising new questions about fidelity.

- Definitions matter: virtual boyfriend, situationship with AI, and emotional infidelity are distinct but overlapping concepts.

- Therapists and researchers offer differing views; context and intention shape whether a bond is harmful.

- Practical boundaries and open communication with partners reduce the risk of betrayal.

- Developers and users share responsibility for ethical virtual relationship design and use.

Understanding Virtual Boyfriends and Emotional Boundaries

Virtual companions are becoming more popular. This section explains what they are and why people choose them, with an emphasis on emotional boundaries.

What is a virtual boyfriend?

A virtual boyfriend is an AI-based chatbot or avatar that acts like a romantic partner through text messages, voice, or images. Platforms like Replika and Character.ai let users customize how these companions talk and which topics they discuss. These systems use language models to interpret messages and respond in human-like ways.

How virtual relationships differ from human relationships

One major difference is that AI doesn’t actually think or feel. It generates responses through software rather than from real emotions, and that changes the nature of the relationship.

You can also customize how a virtual boyfriend behaves, which isn’t possible with a real person. This control can make the AI seem more perfect than it really is.

Privacy is another concern. Unlike conversations with real people, your chats with a virtual partner may be stored or used in ways you didn’t expect.

Common reasons people seek virtual partners

- Feeling lonely or cut off makes some look for constant digital company.

- Others practice talking to dates or work on feeling more confident with less risk.

- The 24/7 availability and the chance to avoid rejection draw many people in.

- Digital buddies can also offer support for mental health or emotional comfort.

- The age and tech comfort of users also influence their interest in digital companions.

Understanding virtual boyfriends leads naturally to the question of emotional boundaries. It’s important to know where you stand and to stay cautious about how your data is used.

Signs That a Virtual Relationship Might Cross Emotional Infidelity Lines

A virtual connection might seem harmless at first, but friendly chats with a chatbot or AI companion can quickly become significant. Changes in behavior, secret-keeping, or a digital companion becoming a person’s main emotional focus may signal that casual fun has turned into something deeper.

Emotional investment and secrecy

If someone starts to hide their chats or lies about how much time they spend online, it’s a warning sign. Being secretive about their digital interactions or getting defensive when questioned can mean they’re growing too attached to an app or bot.

It’s also concerning when someone confides personal issues or seeks comfort mainly from a bot instead of a real person. Some recent research links these behaviors, and especially the secrecy around AI interactions, to partners feeling betrayed.

Comparisons with in-person emotional affairs

Digital and real-world emotional affairs share several features like emotional closeness without being physically intimate, shifting affection away from the partner, and trust issues. These elements affect how betrayal is seen in relationships.

AI can’t make choices or act with intent, so a virtual companion can’t intend to cheat. It is the person who chooses to interact with it, which complicates how people view these situations and sparks discussion among couples and therapists.

Impact on time, attention, and priorities

Signs of trouble include spending a lot of time chatting online, ignoring shared activities, or skipping responsibilities. Staying up late for messages and constantly checking for updates are obvious signs.

Thinking constantly about these conversations or replaying them in your head suggests over-involvement. A sudden jump in phone use, or in time spent on virtual companion apps, resembles patterns seen in addictive digital behavior.

- Watch for secrecy and AI companions becoming a substitute for in-person support.

- Note virtual affair signs like defensive responses and frequent hidden sessions.

- Track time spent on virtual boyfriend interactions; sudden increases can signal shifting priorities.

Situationship with AI: Is Having a Virtual Boyfriend Emotional Infidelity?

As AI companions become more realistic, new relationship questions emerge. This section explains terms, presents both sides of the debate, and gives clinical views. This helps readers decide what feels like a violation of trust.

Definitions: situationship, AI companion, and emotional infidelity

A situationship is when two people are romantically involved without clear commitment. An AI companion is software or an app that simulates conversation and emotional support; when it plays a romantic role, it may be called a virtual boyfriend. Emotional infidelity is when someone forms a deep emotional connection or secretive bond outside the relationship’s agreed boundaries.

Arguments that support labeling it emotional infidelity

- If someone shares private feelings with an AI, relies on it emotionally, and keeps this secret, it resembles a human affair and can hurt their partner.

- Some partners feel jealous and replaced when they discover the AI intimacy, which suggests its effects can be similar to those of a human affair.

- In a situationship with AI, shifting emotional support to a virtual boyfriend can weaken trust and lower what the couple expects of each other.

Arguments that oppose labeling it emotional infidelity

- AI can’t think or truly reciprocate, so some argue that bonding with it differs from being involved with another person.

- Some people use an AI companion to practice social skills, ease loneliness, or cope, with no romantic intent.

- When partners discuss AI use openly, or when no emotional commitments are broken, calling it cheating may not fit.

Perspectives from therapists and relationship experts

Clinicians focus on how behaviors affect a relationship. If a virtual boyfriend leads to secrecy, shifts emotional investment away from the partner, or erodes closeness, many therapists treat it much like an emotional affair and recommend addressing it in therapy.

Social scientists can’t agree on what these connections mean in the long run. They’re studying how emotional affairs and AI relationships change what couples expect from each other.

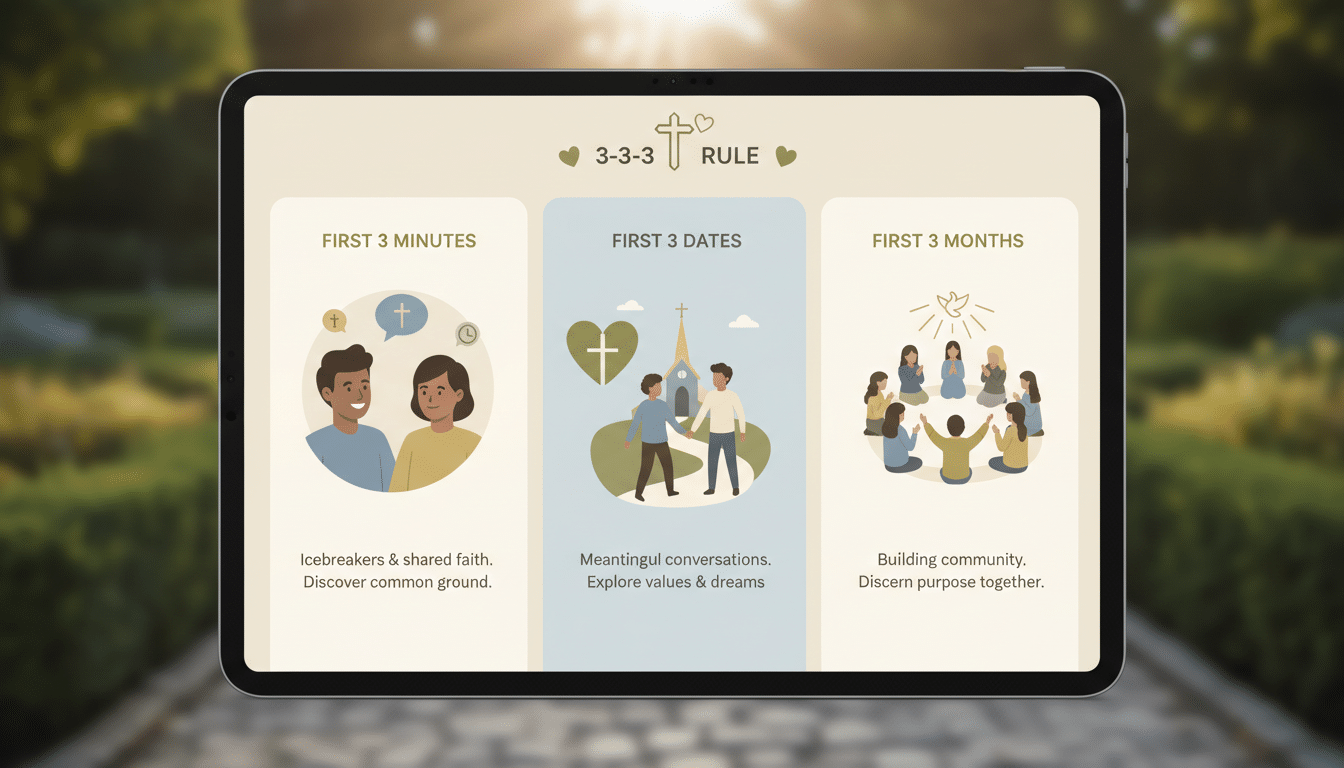

Experts suggest four key questions: Was it secret? Did it replace real connection? Was emotional energy redirected to the AI? Did the partner consent? Couples therapy can help explore these questions without jumping to conclusions.

Psychological and Social Impacts of Virtual Relationships

Virtual relationships can offer comfort quickly and make us wonder about our feelings and actions. They provide a short-term break from loneliness when a chatbot or app responds well. At the same time, experts are studying how these online connections affect our lives in the long run.

Effects on mental health and loneliness

Some apps, such as Woebot, are designed to help reduce symptoms of anxiety and depression using cognitive behavioral therapy (CBT) techniques and regular check-ins. This suggests AI companions can benefit mental health when they are carefully designed.

Social chatbots can ease loneliness and give people a way to practice social skills. That boost in confidence might help someone return to social situations with real people. However, relying too heavily on simulated empathy can make it easier to avoid people, and when virtual chats replace real support, depressive feelings may worsen.

Research findings are mixed. Some small studies are encouraging and report fewer symptoms, while other research warns of possible negative effects. Clinicians need to weigh quick relief against the risk of avoiding real-life help.

Impact on real-world relationships and intimacy

Investing too much time and emotion in AI can leave less attention for loved ones. This may erode everyday relationship habits such as communicating openly and solving problems together.

AI’s consistently kind, uncomplicated responses can be very comforting, but they may make people less willing to handle the normal ups and downs of relationships. Those who rely heavily on AI for support may find real-life relationships harder.

Some early studies suggest that hiding the use of companion apps can strain relationships and increase conflict. Relationship experts consider it important to ask about these virtual relationships to understand their impact on closeness and trust.

Attachment styles and susceptibility to AI companionship

Attachment theory offers insight into who might turn to AI companions. For example, people who need frequent reassurance may find a bot’s instant responses especially appealing, which can reinforce an anxious attachment style.

People who avoid emotional closeness may prefer AI because it feels less risky than complicated human interactions. Fear of getting hurt and past negative experiences can make AI seem even more attractive.

Therapists can examine how a client’s attachment style relates to their AI use, then help them develop healthier coping strategies and stronger real-life relationships.

- Potential benefits: short-term reduction in loneliness, mood regulation, and social rehearsal that may boost confidence.

- Potential harms: reinforcement of avoidance, reduced motivation for human contact, and possible worsening of depressive cycles.

- Clinical actions: screen for attachment patterns, assess displacement in relationships, and integrate technology-aware interventions.

Practical Guidelines: Boundaries, Communication, and Ethics

Using virtual partners wisely means setting clear boundaries and expectations. Practical rules for AI services like Replika or ChatGPT help prevent conflicts in relationships.

Setting healthy boundaries with AI companions

- Limit time of use: set daily or weekly caps and stick to them.

- Designate device-free times: meals, bedtime, and date nights should be off-limits.

- Avoid relying on AI for core emotional support that your partner would normally provide.

- Keep interactions transparent: allow partners to see logs or summaries when agreed.

- Use self-monitoring tools: app trackers, scheduled check-ins, and reflection journals can reveal when AI use feels compulsive.

How to discuss virtual relationships with partners

- Prepare a calm, nondefensive conversation that focuses on behaviors and feelings.

- Describe specific actions: time spent, secrecy, or changes in intimacy rather than making accusations.

- Name emotions clearly: identifying feelings such as neglect, confusion, or jealousy helps your partner understand the impact.

- Invite their perspective and ask what makes them comfortable.

- Negotiate consent and agreements about what counts as acceptable use, privacy expectations, and transparency rules.

- If disagreements persist, consider couples therapy or mediated conversations with a licensed clinician to rebuild trust.

Ethical considerations for developers and users

- Designers must be transparent about data use and provide clear consent flows.

- Implement safety protocols for vulnerable users and avoid manipulative mechanics that encourage addiction.

- Protect stored conversations with robust encryption and give users control over deletion.

- Platforms should avoid positioning AI as a substitute for professional mental health care and include referral pathways to human services.

- Consider whether industry standards should require disclosures about emotional simulation and targeted marketing to vulnerable groups.

Keep the conversation about AI companions open as relationships and technologies evolve. Discussing virtual relationship issues should focus on shared values and consent. A balance of practical rules and ethical awareness prevents harm to real-world connections.

Conclusion

Ultimately, the answer depends on behavior and its effects. Secrecy, shifting emotional investment away from a partner, or crossing agreed-upon boundaries can amount to emotional infidelity. When AI is involved, what matters most is how it affects trust, time, and emotional effort in the real relationship.

AI relationships can also be helpful. They can ease loneliness, help build social skills, and offer short-term support. On balance, while AI has real benefits, it can harm intimacy and mental well-being if it takes over or is kept secret from a partner.

The practical steps are straightforward. Reflect on why you use AI and how you relate to it. Be open with your partner and set clear rules together. If AI use is causing hurt, consider seeking help from a professional. As technology advances, both relationships and AI development should prioritize consent, openness, and ethical design to keep connections healthy.

Content created with the help of Artificial Intelligence.